Effective and Efficient Reinforcement Learning Based on Structural Information Principles (SIDM)

Submitted to IEEE TPAMI in May 2023.

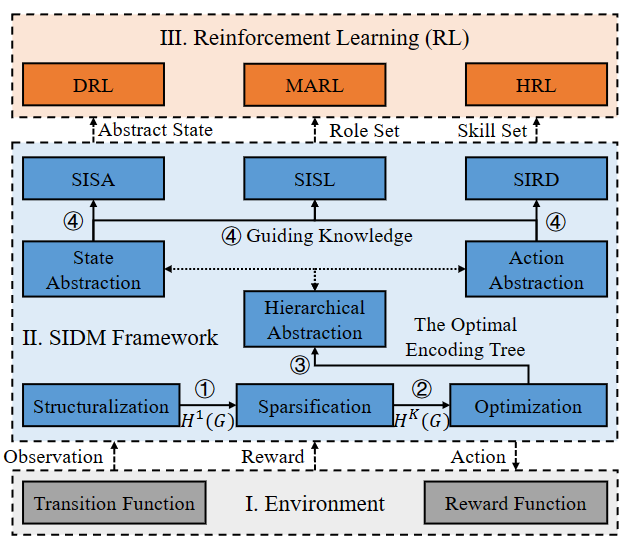

TL;DR We propose SIDM, a new unsupervised and adaptive decision-making framework for Reinforcement Learning. It handles high-complexity environments without manual intervention and improves both sample efficiency and policy effectiveness.

Abstract Reinforcement Learning (RL) algorithms often rely on specific hyperparameters to guarantee their performance, especially in highly complex environments. This paper proposes SIDM, an unsupervised and adaptive decision-making framework for RL that uses action and state abstractions to address this issue. SIDM improves policy quality, stability, and sample efficiency by up to 18.75%, 88.26%, and 64.86%, respectively.

Approach The SIDM framework consists of four modules: structuralization, sparsification, optimization, and hierarchical abstraction. We construct homogeneous state and action graphs, minimize their structural entropy to generate optimal encoding trees, and design an aggregation function for hierarchical state or action abstraction. Based on the hierarchical abstractions, we calculate tree node representations to achieve state abstraction in deep RL (DRL), role discovery in multi-agent RL (MARL), and skill learning in hierarchical RL (HRL).
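To make the entropy-minimization step more concrete, here is a minimal sketch of the quantity being minimized. It is not the SIDM implementation. The text above does not spell out the formula, so the sketch assumes the standard two-level structural entropy from structural information theory, in which each community contributes a term for its cut edges and each vertex a term for its degree relative to its community. The function name `structural_entropy`, the toy graph `EXAMPLE_EDGES`, and the candidate partitions are all hypothetical and chosen only for illustration.

```python
import math


def structural_entropy(edges, partition):
    """Two-level structural entropy of a weighted undirected graph.

    Assumed definition (not taken from the source text):
      H = - sum_j (g_j / vol(G)) * log2(V_j / vol(G))
          - sum_j sum_{i in X_j} (d_i / vol(G)) * log2(d_i / V_j)
    where d_i is the weighted degree of vertex i, V_j the volume of
    community X_j, and g_j the weight of edges leaving X_j.

    edges: dict mapping (u, v) -> weight, each undirected edge listed once.
    partition: list of vertex sets; every vertex appears in exactly one set
               and has at least one incident edge.
    """
    degree = {}
    for (u, v), w in edges.items():
        degree[u] = degree.get(u, 0.0) + w
        degree[v] = degree.get(v, 0.0) + w
    total = sum(degree.values())  # vol(G), twice the total edge weight

    entropy = 0.0
    for community in partition:
        vol = sum(degree[x] for x in community)
        # Weight of edges with exactly one endpoint inside the community.
        cut = sum(w for (u, v), w in edges.items()
                  if (u in community) != (v in community))
        if cut > 0:
            entropy -= (cut / total) * math.log2(vol / total)
        for x in community:
            entropy -= (degree[x] / total) * math.log2(degree[x] / vol)
    return entropy


# Hypothetical toy graph: two triangles joined by a single bridge edge.
EXAMPLE_EDGES = {
    (0, 1): 1.0, (1, 2): 1.0, (0, 2): 1.0,
    (3, 4): 1.0, (4, 5): 1.0, (3, 5): 1.0,
    (2, 3): 1.0,
}

flat = structural_entropy(EXAMPLE_EDGES, [{0, 1, 2, 3, 4, 5}])
triangles = structural_entropy(EXAMPLE_EDGES, [{0, 1, 2}, {3, 4, 5}])
print(f"one community: {flat:.4f}")
print(f"two triangles: {triangles:.4f}")
```

On this toy graph the two-triangle partition scores lower than the single-community partition, which is the sense in which a lower structural entropy corresponds to a better abstraction. A full encoding tree would extend the same idea to more than two levels.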

| Task | SIDM | SAC | SE | HIRO | HSD |
|---|---|---|---|---|---|
| Hurdles | | | | | |
| Limbo | | | | | |
| Pole Balance | | | | | |
| Hurdles Limbo | | | | | |
| Stairs | | | | | |